ERA: A Goal-Based Agent Integrating LLMs and Ontology for Fake News Detection

Expert Reasoner Agent (ERA) Documentation

Project Overview

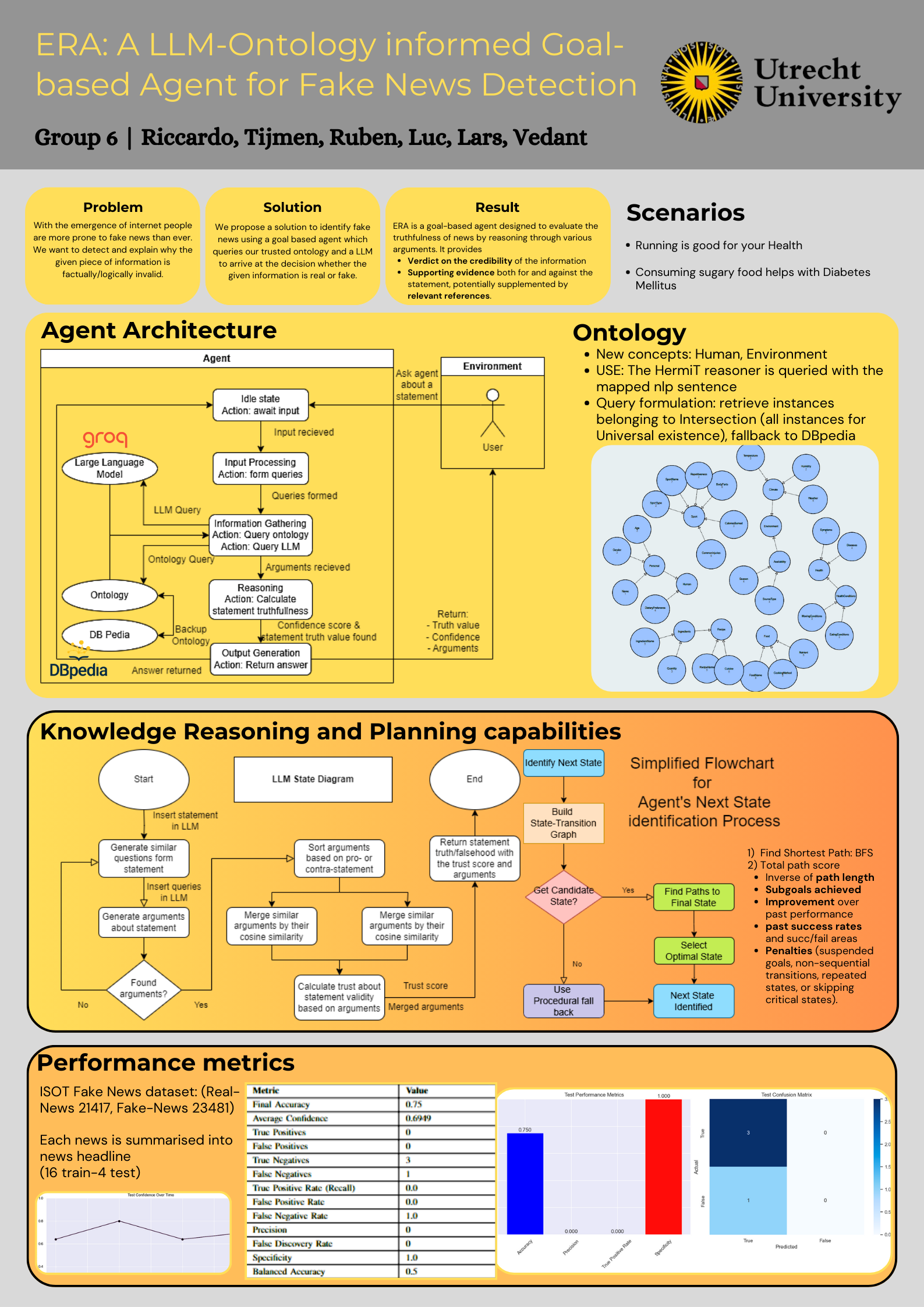

This project presents the ERA agent, an AI-powered system designed to verify the truthfulness of news headlines. By combining a trusted ontology and a large language model (LLM), the ERA agent offers a robust framework for evidence gathering and decision-making. The system leverages state-management techniques, structured queries, and performance metrics to provide reliable verdicts and confidence scores for user-provided statements.

Abstract

This project presents the Expert Reasoner Agent (ERA), an AI-powered system designed to verify the truthfulness of news headlines and generate supporting arguments. ERA combines a trusted ontology with a large language model (LLM) to provide a robust and comprehensive framework for evidence gathering, reasoning, and decision-making. By drawing on both structured ontological knowledge and the broad coverage of the LLM, ERA aims to pair the reliability of curated facts with the flexibility to handle a wide range of topics.

The ERA system employs sophisticated state-management techniques to process user-submitted news statements through various stages, including input processing, information gathering, and reasoning. It uses structured queries to extract relevant data from the ontology, while also interacting with the LLM to generate and assess arguments for and against the statement. By integrating these two sources of information, ERA delivers reliable verdicts, accompanied by a confidence score that reflects the strength and consistency of the evidence.

Through its goal-oriented design, ERA not only aims to provide accurate fact-checking but also offers transparency by presenting both supporting and opposing arguments. This dual-source approach fosters a more nuanced and explainable decision-making process, making ERA a valuable tool in the fight against misinformation.

Goals and Challenges of AI-Based Fake News Detection

Goals

- Verdict with Supporting Arguments: ERA delivers a verdict on a headline’s truthfulness and presents arguments for and against it, for transparency and understanding.

- Integration of Diverse Sources: Combines reliable ontological knowledge with the expansive capabilities of LLMs.

- User-Friendly Interaction: Simplified inputs and detailed outputs, including confidence scores, for ease of use.

- Transparency and Verifiability: Presents evidence-based conclusions to build trust and accountability.

- Continuous Learning: Adapts through dynamic state transitions and historical data analysis to improve over time.

Challenges

- Over-reliance on LLMs: Managing biases and hallucinations inherent in LLM-generated content.

- Ontology Limitations: Expanding the scope and keeping content updated for comprehensive coverage.

- Ethical and Privacy Concerns: Safeguarding user data and preventing misuse.

- Balancing Trust and Confidence: Integrating information reliability across sources for accurate scoring.

- Complex Planning: Ensuring robust state transitions in dynamic environments.

Architecture and Interactions

ERA adopts a goal-based architecture to dynamically plan, adapt, and respond to user inputs.

Goal-Based Design

- Main Goal: Deliver a truthful verdict with supporting arguments.

- Sub-goals: Execute tasks like querying the ontology, interacting with the LLM, and generating confidence scores through hierarchical goal management.
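The document names hierarchical goal management but does not describe its implementation. A minimal sketch, assuming a tree of goals in which a leaf runs one task and a parent succeeds only when all of its sub-goals succeed, in order. The `Goal` class, the `stub` helper, and the goal names are hypothetical; the stubs only record that they ran.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Goal:
    """Hypothetical goal node: a leaf runs a task, a parent pursues its sub-goals."""
    name: str
    action: Optional[Callable[[dict], bool]] = None  # leaf task; returns True on success
    subgoals: list[Goal] = field(default_factory=list)

    def pursue(self, context: dict) -> bool:
        if self.action is not None:
            return self.action(context)
        # Parent goal: pursue sub-goals in order; all() stops at the first failure.
        return all(sub.pursue(context) for sub in self.subgoals)


def stub(name: str) -> Callable[[dict], bool]:
    """Placeholder task that records that it ran (stands in for real work)."""
    def run(context: dict) -> bool:
        context.setdefault("trace", []).append(name)
        return True
    return run


main_goal = Goal("deliver_verdict", subgoals=[
    Goal("gather_evidence", subgoals=[
        Goal("query_ontology", action=stub("query_ontology")),
        Goal("query_llm", action=stub("query_llm")),
    ]),
    Goal("compute_confidence", action=stub("compute_confidence")),
])

context: dict = {}
print(main_goal.pursue(context), context["trace"])
# True ['query_ontology', 'query_llm', 'compute_confidence']
```

Because sub-goals short-circuit, a failed ontology query in this sketch would stop the parent goal before the LLM is consulted; a real agent might instead re-plan or fall back to another sub-goal.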

State Management System

- State Transitions: Defined states (Idle, Input Processing, Information Gathering, Reasoning, and Output Generation) guide ERA’s operations.

- Dynamic Decision-Making: ERA learns from past actions and adapts decisions using contextual data and metrics.
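The five states can be modelled as a small state machine that rejects illegal transitions and keeps a history of visited states. This is a sketch under assumptions: the transition table, including the loop from Reasoning back to Information Gathering when evidence is insufficient, is hypothetical and not taken from ERA's code.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    INPUT_PROCESSING = auto()
    INFORMATION_GATHERING = auto()
    REASONING = auto()
    OUTPUT_GENERATION = auto()


# Hypothetical transition table: the forward path plus a loop back to
# INFORMATION_GATHERING when reasoning decides it needs more evidence.
TRANSITIONS = {
    State.IDLE: {State.INPUT_PROCESSING},
    State.INPUT_PROCESSING: {State.INFORMATION_GATHERING, State.IDLE},
    State.INFORMATION_GATHERING: {State.REASONING},
    State.REASONING: {State.OUTPUT_GENERATION, State.INFORMATION_GATHERING},
    State.OUTPUT_GENERATION: {State.IDLE},
}


class StateMachine:
    def __init__(self) -> None:
        self.state = State.IDLE
        self.history = [State.IDLE]  # past states, available to later decisions

    def transition(self, target: State) -> None:
        if target not in TRANSITIONS[self.state]:
            raise ValueError(f"illegal transition {self.state.name} -> {target.name}")
        self.state = target
        self.history.append(target)


sm = StateMachine()
for s in [State.INPUT_PROCESSING, State.INFORMATION_GATHERING, State.REASONING,
          State.INFORMATION_GATHERING, State.REASONING,  # one extra gathering round
          State.OUTPUT_GENERATION, State.IDLE]:
    sm.transition(s)
print(" -> ".join(s.name for s in sm.history))
# IDLE -> INPUT_PROCESSING -> INFORMATION_GATHERING -> REASONING -> INFORMATION_GATHERING -> REASONING -> OUTPUT_GENERATION -> IDLE
```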

External Interactions

Ontology:

A trusted knowledge base organized across domains (e.g., health, environment). ERA uses SPARQL queries to extract structured information and validate user inputs.

Large Language Model (LLM):

Provides broader contextual arguments using prompt engineering to structure responses. The agent processes outputs to merge similar arguments and calculate reliability scores.

Confidence Calculation

Trust scores from the ontology and LLM, combined with argument strength, are synthesized into a confidence score for each verdict.
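The exact fusion formula is not given. One hypothetical way to combine the two sources is a trust-weighted vote: each source contributes its argument strength times its base trust, signed by whether it supports the statement. The LLM trust of 0.8 comes from the Argument Analysis section; the ontology trust of 0.95 and the averaging rule are assumptions.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

LLM_TRUST = 0.8        # base trust for the LLM, as stated in the text
ONTOLOGY_TRUST = 0.95  # hypothetical: the text gives no value for the ontology


@dataclass
class SourceVerdict:
    source: str
    supports: bool   # does this source's evidence support the statement?
    strength: float  # argument strength in [0, 1]
    trust: float     # base trust in the source, in [0, 1]


def combine(verdicts: list) -> Tuple[Optional[bool], float]:
    """Trust-weighted vote; returns (verdict, confidence in [0, 1])."""
    if not verdicts:
        return None, 0.0
    signed = sum((1 if v.supports else -1) * v.trust * v.strength for v in verdicts)
    if signed == 0:
        return None, 0.0  # evidence cancels out: no verdict
    # Averaging over sources keeps confidence at or below the mean trust.
    return signed > 0, abs(signed) / len(verdicts)


verdict, confidence = combine([
    SourceVerdict("ontology", supports=True, strength=0.9, trust=ONTOLOGY_TRUST),
    SourceVerdict("llm", supports=False, strength=0.6, trust=LLM_TRUST),
])
print(verdict, round(confidence, 4))  # True 0.1875
```

Disagreement between the sources pulls confidence down sharply, which matches the intent that the ontology acts as a counterbalance to the LLM.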

Query Formulation and Argument Analysis with the LLM

The ERA agent’s interaction with the LLM follows a structured two-stage query formulation process, followed by multi-step argument analysis.

Query Formulation

- Generating Questions:

  - Transforms the user statement into four questions, two of which negate the statement without using the word “not”.

  - The questions are phrased differently to elicit varied perspectives and ensure comprehensive coverage.

Example:

- User Statement: “Daily coffee boosts productivity.”

- Generated Questions:

  - Does daily coffee boost productivity?

  - Is daily coffee a key factor in increased productivity?

  - Does daily coffee hinder productivity? (negation)

  - Is daily coffee’s impact on productivity overstated? (negation)

- Requesting Arguments:

  - Each question is embedded in a prompt instructing the LLM to provide concise arguments and assign each one a reliability score (1-10).

Example Prompt:

“Does daily coffee hinder productivity? (negation)” Present arguments concisely, focusing on evidence without speculation, and structure the response as evidence for or against the statement. Please give every statement a score from 1-10 on how reliable it is.
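The two stages can be sketched as follows. Only the instruction text is copied from the example prompt above; the rest is assumed: the function names, the check that rejects the word “not” in generated questions, and the `For: <argument> (8/10)` reply format that `parse_arguments` expects, since the document does not say how the LLM formats its answer. In ERA the questions come from the LLM in stage 1; here they are hard-coded from the example.

```python
import re

# Instruction text from the example prompt; the template around it is an assumption.
ARGUMENT_INSTRUCTIONS = (
    "Present arguments concisely, focusing on evidence without speculation, "
    "and structure the response as evidence for or against the statement. "
    "Please give every statement a score from 1-10 on how reliable it is."
)
# Hypothetical reply format: "For: <argument> (8/10)" or "Against: <argument> (6/10)".
LINE_RE = re.compile(r"^\s*(for|against)\s*:\s*(.+?)\s*\((\d{1,2})\s*/\s*10\)\s*$",
                     re.IGNORECASE)


def validate_questions(questions: list[str]) -> list[str]:
    """Stage 1 check: exactly four questions, none using the word 'not'."""
    if len(questions) != 4:
        raise ValueError(f"expected 4 questions, got {len(questions)}")
    for q in questions:
        if re.search(r"\bnot\b", q, flags=re.IGNORECASE):
            raise ValueError(f"negation must avoid 'not': {q!r}")
    return questions


def build_argument_prompt(question: str) -> str:
    """Stage 2: embed one question in the argument-request prompt."""
    return f'"{question}" {ARGUMENT_INSTRUCTIONS}'


def parse_arguments(response: str) -> list[tuple[str, str, int]]:
    """Extract (stance, argument, score) tuples, discarding scores outside 1-10."""
    parsed = []
    for line in response.splitlines():
        m = LINE_RE.match(line)
        if m and 1 <= int(m.group(3)) <= 10:
            parsed.append((m.group(1).lower(), m.group(2), int(m.group(3))))
    return parsed


questions = validate_questions([
    "Does daily coffee boost productivity?",
    "Is daily coffee a key factor in increased productivity?",
    "Does daily coffee hinder productivity?",
    "Is daily coffee's impact on productivity overstated?",
])
prompts = [build_argument_prompt(q) for q in questions]
reply = "For: Caffeine increases alertness (8/10)\nAgainst: Tolerance builds over time (6/10)"
print(parse_arguments(reply))
# [('for', 'Caffeine increases alertness', 8), ('against', 'Tolerance builds over time', 6)]
```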

Argument Analysis

- Reliability Score Assessment:

  - Evaluates the LLM’s assigned scores to gauge each argument’s validity.

- Similarity Analysis and Merging:

  - Tokenizes the arguments, uses cosine similarity to detect redundant ones, and merges similar arguments, averaging their reliability scores.

- Verdict and Confidence Calculation:

  - Aggregates the arguments to determine whether the statement is true or false.

  - Confidence is calculated from a base trust score (0.8 for the LLM), adjusted according to whether the arguments support or contradict the statement.
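A minimal sketch of these steps, assuming bag-of-words token counts for the cosine similarity, a hypothetical similarity threshold of 0.6, greedy merging within the same stance, and a hypothetical confidence rule: the LLM base trust of 0.8 scaled by how one-sided the merged evidence is. It also spells out a step the text leaves implicit: an argument for a negated question counts against the original statement, so `orient` flips its stance before aggregation.

```python
import math
import re
from collections import Counter

SIMILARITY_THRESHOLD = 0.6  # hypothetical cut-off; the text gives no value
LLM_BASE_TRUST = 0.8        # base trust for the LLM, from the text


def orient(stance: str, from_negation: bool) -> str:
    """Express a stance relative to the original statement."""
    if not from_negation:
        return stance
    return "against" if stance == "for" else "for"


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9']+", text.lower())


def cosine(a: str, b: str) -> float:
    """Cosine similarity between the token-count vectors of two texts."""
    ca, cb = Counter(tokenize(a)), Counter(tokenize(b))
    dot = sum(ca[t] * cb[t] for t in ca)
    norm = math.sqrt(sum(v * v for v in ca.values())) * math.sqrt(sum(v * v for v in cb.values()))
    return dot / norm if norm else 0.0


def merge_similar(arguments):
    """Greedily merge (stance, text, score) tuples of the same stance whose
    similarity reaches the threshold; a merged group keeps its first text
    and the mean of its scores."""
    groups = []  # each entry: [stance, text, scores]
    for stance, text, score in arguments:
        for g in groups:
            if g[0] == stance and cosine(g[1], text) >= SIMILARITY_THRESHOLD:
                g[2].append(score)
                break
        else:
            groups.append([stance, text, [score]])
    return [(s, t, sum(sc) / len(sc)) for s, t, sc in groups]


def verdict_and_confidence(merged):
    """Compare reliability mass for vs. against; scale base trust by the margin."""
    support = sum(score for stance, _, score in merged if stance == "for")
    contra = sum(score for stance, _, score in merged if stance == "against")
    if support == contra:
        return None, 0.0  # balanced or empty evidence: no verdict
    margin = abs(support - contra) / (support + contra)  # 0 balanced, 1 unanimous
    return support > contra, LLM_BASE_TRUST * margin


args = [
    ("for", "Caffeine increases alertness and focus", 9),
    ("for", "Caffeine increases focus and alertness", 7),
    (orient("for", from_negation=True), "Coffee can disrupt sleep", 4),
]
merged = merge_similar(args)
print(merged)
print(verdict_and_confidence(merged))
```

Here the first two arguments share the same tokens and merge into one with score 8.0; the sleep argument, coming from a negated question, counts against the statement.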

The Role of the Ontology

The ontology underpins ERA’s fact-checking by providing structured and verified domain-specific knowledge.

Contributions

- Structured Representation: Organizes information for targeted queries.

- Domain Expertise: Offers nuanced insights within its scope.

- Trusted Source: Acts as a reliable counterbalance to the LLM.

- Supports Argumentation: Enhances LLM-generated arguments with verified facts.
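A targeted query against the ontology might be assembled as below. The namespace `http://example.org/era#`, the class `era:Fact`, and the properties `era:text` and `era:domain` are hypothetical, since the document does not publish ERA's schema; the generated string uses standard SPARQL 1.1 (`CONTAINS`, `LCASE`, `FILTER`). A SPARQL engine such as rdflib's `Graph.query` could then execute it.

```python
import re
from typing import Optional

ERA_PREFIX = "http://example.org/era#"  # hypothetical namespace


def escape_literal(text: str) -> str:
    """Escape a string for use inside a double-quoted SPARQL literal."""
    return text.replace("\\", "\\\\").replace('"', '\\"')


def build_fact_query(keywords: list[str], domain: Optional[str] = None, limit: int = 20) -> str:
    """Build a SELECT that finds facts whose text mentions any keyword."""
    if not keywords:
        raise ValueError("at least one keyword is required")
    if domain is not None and not re.fullmatch(r"[A-Za-z_][A-Za-z0-9_]*", domain):
        raise ValueError(f"invalid domain name: {domain!r}")
    conditions = " || ".join(
        f'CONTAINS(LCASE(?text), "{escape_literal(k.lower())}")' for k in keywords
    )
    domain_clause = f"  ?fact era:domain era:{domain} .\n" if domain else ""
    return (
        f"PREFIX era: <{ERA_PREFIX}>\n"
        "SELECT ?fact ?text WHERE {\n"
        "  ?fact a era:Fact ;\n"
        "        era:text ?text .\n"
        f"{domain_clause}"
        f"  FILTER({conditions})\n"
        "}\n"
        f"LIMIT {int(limit)}"
    )


print(build_fact_query(["coffee", "productivity"], domain="Health"))
```

Restricting the query by domain reflects the ontology's limited scope: a statement outside the covered domains simply returns no facts, leaving the LLM as the only source.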

Limitations

- Limited Scope: Coverage is restricted to predefined domains.

- Static Nature: Requires regular updates for ongoing relevance.

Comparison of Agent Architectures

| Characteristic | Reflex-Based | Utility-Based | BDI (Belief-Desire-Intention) | Goal-Based (ERA) |
|---|---|---|---|---|
| Decision Style | Predefined Rules | Maximizes Utility | Action-Driven Intentions | Contextual Planning and Reasoning |
| Planning Capability | None | Short-Term Optimization | Impulsive Plans | Long-Term Causal Planning |
| Adaptability | Static | Moderate | Context-Aware | High |
| Transparency | Low | Moderate | Low | High (Evidence and Confidence Provided) |
| Use Case Fit | Simple, Static Scenarios | Optimization Tasks | Reactive Systems | Complex, Dynamic Real-Life Scenarios |

Why a Goal-Based Architecture?

ERA’s goal-based design supports dynamic decision-making, learning from prior interactions, and integrating multiple data sources. This architecture provides:

- Contextual Decision-Making: Adapts to new inputs and environments for flexible reasoning.

- Long-Term Planning: Prioritizes goal achievement with sequential, state-based planning.

- Transparent Reasoning: Backs every verdict with evidence and a confidence score, which helps build user trust.

- Balanced Integration: Combines structured ontology data with the LLM’s expansive insights.

Conclusion

The Expert Reasoner Agent (ERA) demonstrates the effectiveness of a goal-based architecture in addressing the challenges of AI-powered fake news detection. By combining reliable ontological data with the contextual breadth of LLMs, ERA offers a robust solution for delivering transparent, evidence-based verdicts in complex and dynamic scenarios.